The biggest argument I’ve gotten into lately (within the legal tech space anyway) is over so-called “Agentic AI.” I say “so-called” because most of the tools billing themselves as “agentic” don’t bear much resemblance to the “Agentic AI” being discussed in every other sector. Consumer AI companies extol the virtues of agents that autonomously make reservations for you based on scanning your horoscope that morning. “Agentic” is the buzzword of the hour. It’s what gets all the VCs setting their money on fire investing in AI so excited and the technophiles intrigued. And so legal tech companies have to adopt that vernacular too.

However, lawyers considering new products aren’t necessarily psyched about the idea of AI using black box decision-making. Because the buzzword we use for that in this profession is “malpractice.”

The good news is that, despite the moniker, most of the products being described as agentic in the legal space more closely resemble a batch file of professionally manicured chat prompts. Which is good! The providers behind these elaborate automations have spent a lot of time and money to make sure the AI provides the best possible results. AI hallucinations are real, but the greatest source of error remains between the keyboard and the chair. Bad prompts lead to bad results… or even hallucinated ones. Lawyers, whether in-house or at a firm, are likely to feel a lot better about a product described as “an expert-curated workflow to maximize AI’s potential while protecting against errors” than an “autonomous agent.”

The legal industry gets its cues from the tech providers, and those providers need to be able to communicate what they can offer in terms that lawyers are ready to hear.

Plat4orm and Lumen Advisory Group just dropped a report to help translate technobabble to legalese: From Hours to Outcomes: The Legal Tech Executive Playbook for Value Creation in the AI Era. It’s the first in a series of planned playbooks, this one offering a strategic guide to coach up legal tech providers on how they can guide their own clients through the AI waters. As someone who interviews tech providers all the time, I can usually tell when a company is represented by folks like Plat4orm and when it isn’t. This guide offers a slice of insight into why.

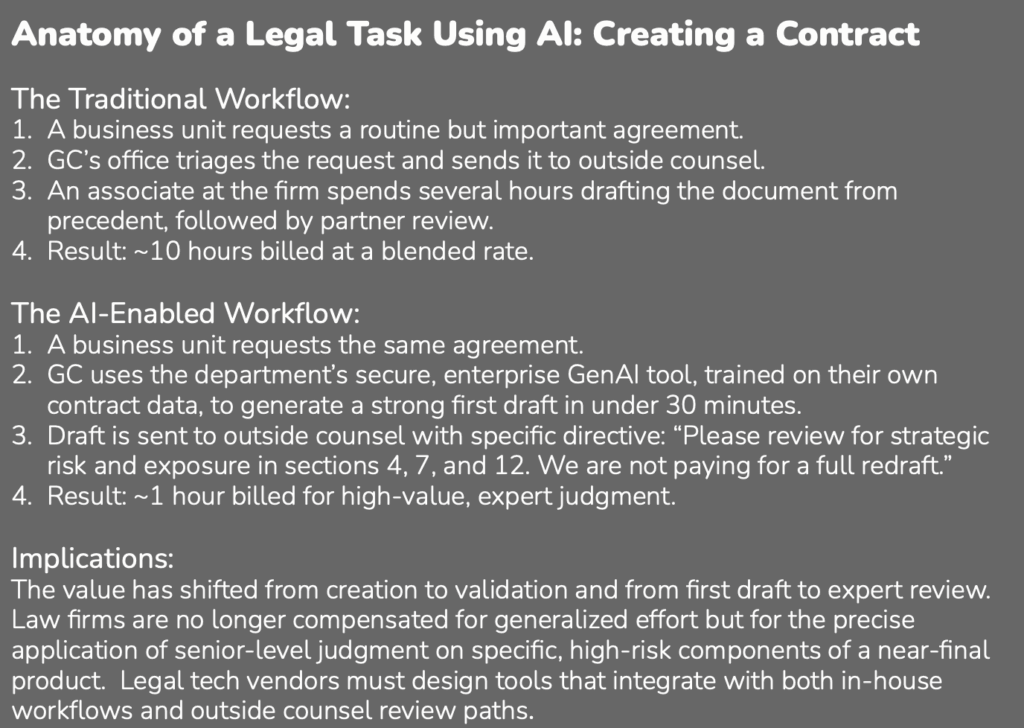

AI providers will always talk about time savings, but it matters how they describe time savings. Silicon Valley tech bros describe time savings in terms of AI “taking over” decisions. They gush about how they’ve built something to replace humans. And, yes, they’ll probably drop something about it being “agentic” and “autonomous.”

Contrast that with the description above. Note that terms like “secure” and “trained on their own contract data” show up before anyone mentions time. Note how it’s stressed that the AI created “a strong first draft,” implicitly reassuring the lawyer customer that we’re only talking about a draft out of the gate. Legal advice is “high-value” and “professional” (keeping those egos stroked) while describing a literal decimation of billable time.

Don’t leave it in terms of billed time lost; focus on real time gained. “Reframe the conversation from ‘hours saved’ to ‘strategic capacity unlocked,’” as the playbook explains.

An MIT study found that some 95% of generative AI pilots fail to deliver measurable business impact. There’s no single cause for this, but at least part of it is the general confusion among lawyers over what all this stuff even means. How do you take the plunge and sink resources into AI (and once you do, how do you commit to overcoming the adoption hurdle) when you aren’t even sure you’re making the right AI decisions? The resulting inaction ends up like a middle school dance: everyone standing awkwardly along the walls while the unruly kids try to spike the punch with bootleg Four Lokos when no one’s looking. People using ChatGPT for legal research are the Four Lokos kids of this analogy.

What this playbook offers is a responsible chaperone for that dance.

Joe Patrice is a senior editor at Above the Law and co-host of Thinking Like A Lawyer. Feel free to email any tips, questions, or comments. Follow him on Twitter or Bluesky if you’re interested in law, politics, and a healthy dose of college sports news. Joe also serves as a Managing Director at RPN Executive Search.
