Sci-Fi Author: In my book I invented the Torment Nexus as a cautionary tale.

Tech Company: At long last, we have created the Torment Nexus from classic sci-fi novel Don’t Create The Torment Nexus.

So goes one of the truly perfect social media posts of our era. Last week, law students at the University of North Carolina School of Law had a chance to test out the legal profession’s equivalent of the Torment Nexus with a mock trial putting AI chatbots (specifically ChatGPT, Grok, and Claude) in the role of jurors deciding the fate of an accused defendant. For all the faults of the modern jury system, should we replace it with an algorithm built at the behest of a moron who wants to rewrite history to make sure his personal bot injects an aside about “white genocide” into every recipe request?

As it turns out, the answer is still no. At least not as a 1-to-1 replacement.

The experiment centered on a mock theft case pursuant to the make-believe “AI Criminal Justice Act of 2035.” Under the watchful eye of Professor Joseph Kennedy, serving as the judge, law students put on the case of Henry Justus, an African American high school senior charged with theft. The bots received a real-time transcript of the proceedings and then, like an unholy episode of Judge Judy: The Singularity Edition, the algorithmic jurors deliberated.

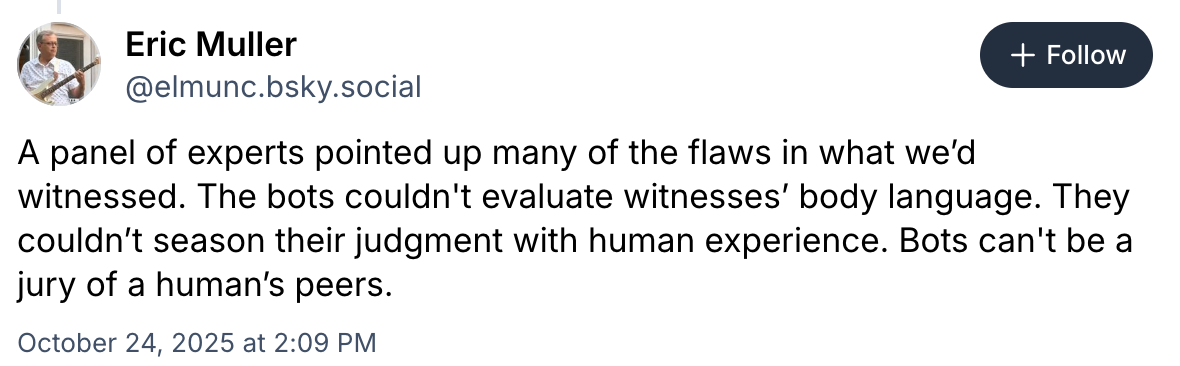

Professor Eric Muller left with some concerns.

The idea that robots can cure the justice system’s bias (and save the government $15/day per juror in the process) is the kind of Silicon Valley pipe dream that generates another round of funding to be heaped on the capex fire. Venture capitalists and tech bros may pitch it as “disrupting empathy” or whatever, but we’re just swapping one bias for another: human for algorithmic, emotional for opaque, personal for corporate. So far, the robots as a whole have proven efficient vectors of implicit bias, taking the unconscious biases of their designers and the training data they’re given and spitting them back with a deceptive coat of false neutrality.

Except Grok, of course, which is constantly being tinkered with to better exhibit explicit and very conscious bias.

And there’s this:

Since it’s Claude, I assume it got to “Ladies and Gentlemen of the–” and just threw up a “message will exceed the length limit” warning.

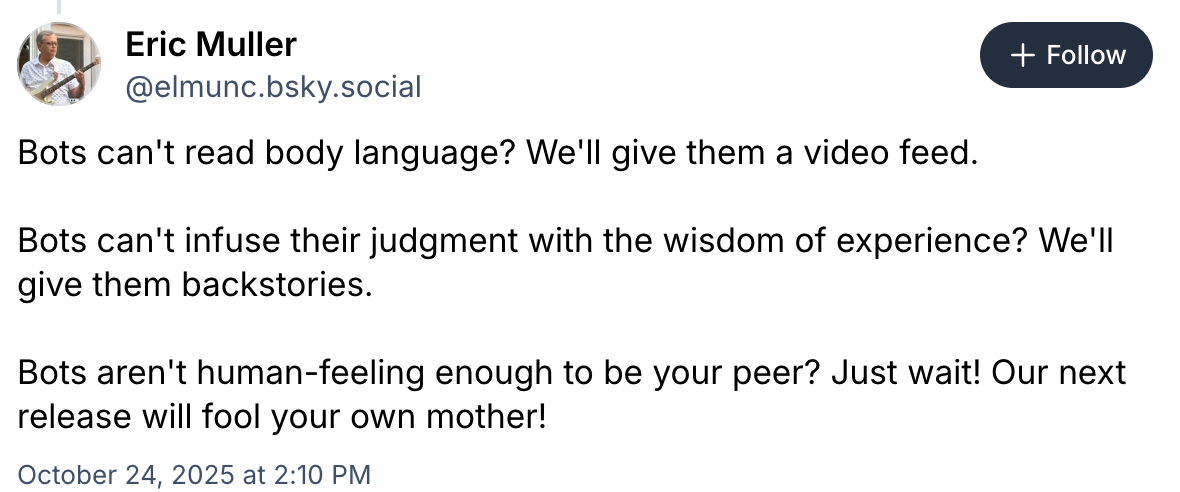

But a machine can’t tell whether a witness is lying based on their demeanor, because it can’t perceive that demeanor. It can only tell whether there’s an outright contradiction of a specific fact. Maybe it could conjure up some approximation of “doubt” if a witness exhibits inconsistent sentence structure or something, and that’s fine if you think the difference between an innocent man and a sociopath should hang on their grasp of Strunk & White. Which, honestly… fair. Muller’s deeper concern, though, is that the tech industry’s improvement death drive will take all the current drawbacks and patch the symptoms without acknowledging the disease.

The thing with AI, aside from its inherently rickety funding model, is that (a) it’s very good at the tasks it’s good at, and (b) almost everyone pretends it’s good at the tasks it’s not good at. Can AI replace human jurors? No. No matter how bad human jurors are, a sycophantic calculator playing “word roulette” isn’t better.

That’s not to say there isn’t a role for AI in the jury process. It goes without saying that civil litigation involves much lower stakes than criminal cases, and a panel of robots might provide an opportunity to direct limited juror resources toward the criminal cases that matter more. Even within the criminal context, there could be, with responsible design and regulation beyond what we have right now, a role for AI in letting jurors query the evidence, so they don’t miss key answers buried in pages and pages of transcripts just because they can’t recall the exact wording to search for. Or in helping jurors visualize the points of disagreement between the parties.

Just because AI isn’t ready to replace humans in the jury box doesn’t mean it has nothing to offer, though. We just need to keep experimenting… in mock trials only.

Joe Patrice is a senior editor at Above the Law and co-host of Thinking Like A Lawyer. Feel free to email any tips, questions, or comments. Follow him on Twitter or Bluesky if you’re interested in law, politics, and a healthy dose of college sports news. Joe also serves as a Managing Director at RPN Executive Search.