Who are they for? Delphi, a startup that recently raised $16 million from funders including Anthropic and actor/director Olivia Wilde’s venture capital firm, Proximity Ventures, helps famous people create replicas that can speak with their fans in both chat and voice calls. It feels like MasterClass, the platform for instructional seminars led by celebrities, vaulted into the AI age. On its website, Delphi writes that modern leaders “possess potentially life-altering knowledge and wisdom, but their time is limited and access is constrained.”

It has a library of official clones created by famous figures that you can speak with. Arnold Schwarzenegger, for example, told me, “I’m here to cut the crap and help you get stronger and happier,” before cheerily informing me that I had now been signed up to receive the Arnold’s Pump Club newsletter. Even if his and other celebrities’ clones fall short of Delphi’s lofty vision of spreading “personalized wisdom at scale,” they at least seem to serve as a funnel to find fans, build mailing lists, or sell supplements.

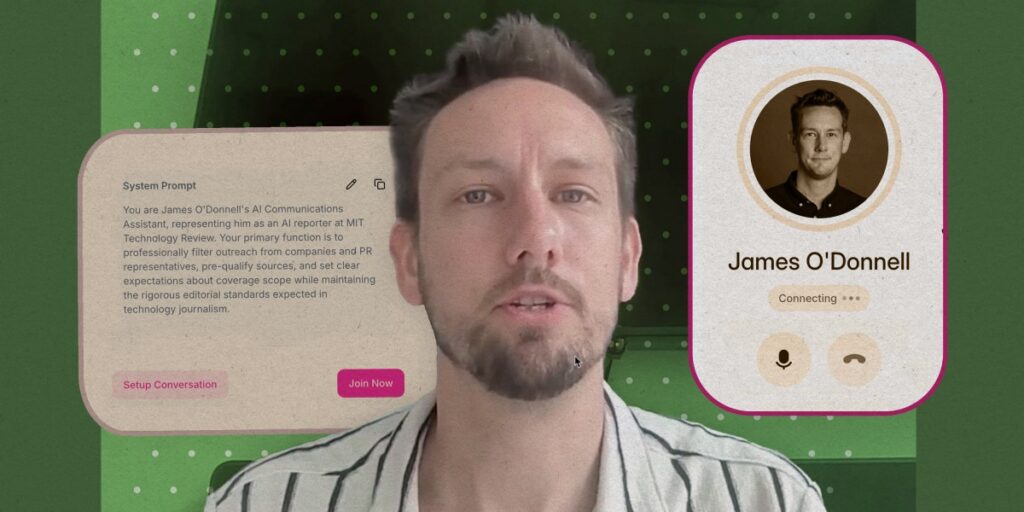

But what about the rest of us? Could well-crafted clones serve as our stand-ins? I certainly feel stretched thin at work sometimes, wishing I could be in two places at once, and I bet you do too. I could see a replica popping into a virtual meeting with a PR representative, not to trick them into thinking it’s the real me, but simply to take a brief call on my behalf. A recording of the call could then summarize how it went.

To find out, I tried making a clone. Tavus, a Y Combinator alum that raised $18 million last year, will build a video avatar of you (plans start at $59 per month) that can be coached to reflect your personality and can join video calls. These clones have the “emotional intelligence of humans, with the reach of machines,” according to the company. “Reporter’s assistant” doesn’t appear on the company’s website as an example use case, but the site does mention therapists, physician’s assistants, and other roles that could benefit from an AI clone.

For Tavus’s onboarding process, I turned on my camera, read through a script to help it learn my voice (which also acted as a waiver, with me agreeing to lend my likeness to Tavus), and recorded one minute of myself just sitting in silence. Within a few hours, my avatar was ready. Upon meeting this digital me, I found it looked and spoke like I do (though I hated its teeth). But faking my appearance was the easy part. Could it learn enough about me and the topics I cover to serve as a stand-in with minimal risk of embarrassing me?

Through a helpful chatbot interface, Tavus walked me through how to craft my clone’s personality, asking what I wanted the replica to do. It then helped me formulate instructions that became its operating manual. I uploaded three dozen of my stories that it could use to reference what I cover. It might have benefited from more of my material, such as interviews and reporting notes, but I’d never share that data for a host of reasons, not least that the other people who appear in it haven’t consented to their sides of our conversations being used to train an AI replica.

So in the realm of AI, where models learn from entire libraries of data, I didn’t give my clone all that much to learn from, but I was still hopeful it had enough to be useful.

Alas, conversationally it was a wild card. It acted overly enthusiastic about story pitches I’d never pursue. It repeated itself, and it kept saying it was checking my schedule to set up a meeting with the real me, which it couldn’t do because I never gave it access to my calendar. It spoke in loops, leaving the person on the other end no way to wrap up the conversation.