Headlines

On February 13, the Wall Street Journal reported something that hadn’t been public before: the Pentagon used Anthropic’s Claude AI during the January raid that captured Venezuelan leader Nicolás Maduro.

It said Claude’s deployment came through Anthropic’s partnership with Palantir Technologies, whose platforms are widely used by the Defense Department.

Reuters tried to independently verify the report and could not. Anthropic declined to comment on specific operations. The Department of Defense declined to comment. Palantir said nothing.

But the WSJ report revealed one more detail.

Sometime after the January raid, an Anthropic employee reached out to someone at Palantir and asked a direct question: how was Claude actually used in that operation?

The company that built the model and signed the $200 million contract had to ask someone else what its own software did during a military attack on a capital city.

This one detail tells you everything about where we actually are with AI governance. It also tells you why “human in the loop” stopped being a safety guarantee somewhere between the contract signing and Caracas.

How big was the operation

Calling this a covert extraction misses what actually happened.

Delta Force raided multiple targets across Caracas. More than 150 aircraft were involved. Air defense systems were suppressed before the first boots hit the ground. Airstrikes hit military targets and air defenses, and electronic warfare assets were moved into the region, per Reuters.

Cuba later confirmed that 32 of its soldiers and intelligence personnel were killed and declared two days of national mourning. Venezuela’s government cited a death toll of roughly 100.

Two sources told Axios that Claude was used during the active operation itself, though Axios noted it could not confirm the precise role Claude played.

What Claude could actually have done

To understand what could have been happening, you need to know one technical thing about how Claude works.

Anthropic’s API is stateless. Each call is independent: you send text in, you get text back, and that interaction is over. There is no persistent memory, and no Claude running continuously in the background.

It is less like a brain and more like an extremely fast consultant you can call every thirty seconds: you describe the situation, they give you their best analysis, you hang up, you call again with new information.
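To make “stateless” concrete, here is a minimal sketch. It does not call Anthropic’s API. `stateless_call` is a hypothetical stand-in that sees only the messages passed to it, and the role/content message shape is an assumption made for illustration.

```python
# Hypothetical stand-in for a stateless model endpoint (no network, no memory).
# It can only "know" what is inside the messages passed to this one call.
def stateless_call(messages):
    user_text = " ".join(m["content"] for m in messages if m["role"] == "user")
    return f"reply based only on: {user_text}"

# Call 1 describes the situation.
first = stateless_call([{"role": "user", "content": "A convoy is at the bridge."}])

# Call 2 is a fresh call: nothing from call 1 carries over.
second = stateless_call([{"role": "user", "content": "Where is it now?"}])

# Call 3 works only because the caller resends the earlier exchange.
third = stateless_call([
    {"role": "user", "content": "A convoy is at the bridge."},
    {"role": "assistant", "content": first},
    {"role": "user", "content": "Where is it now?"},
])

print(second)  # contains no mention of the bridge
print(third)   # mentions the bridge, because the caller supplied it again
```

Any continuity between calls is the caller’s responsibility, which is the consultant analogy in code form.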

That is the API. But it says nothing about the systems Palantir built on top of it.

You can engineer an agent loop that feeds real-time intelligence into Claude continuously. You can build workflows where Claude’s outputs trigger the next action with minimal latency between recommendation and execution.
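A toy version of such a loop might look like the sketch below. Everything in it is an assumption for illustration: `stateless_model` is a hypothetical stub rather than a real API, and the `FLAG`/`MONITOR` labels are invented. It describes nothing that Palantir or anyone else actually built.

```python
from collections import deque

# Hypothetical stub for a stateless model call: output depends only on the prompt.
def stateless_model(prompt):
    return "FLAG" if "convoy" in prompt else "MONITOR"

class AgentLoop:
    """Wrapper that holds the state the underlying model call does not."""

    def __init__(self, model, window=3):
        self.model = model
        self.history = deque(maxlen=window)  # rolling context kept by the wrapper
        self.actions = []                    # stand-in for downstream triggers

    def tick(self, observation):
        self.history.append(observation)
        prompt = "\n".join(self.history)       # resend recent context on every call
        recommendation = self.model(prompt)
        self.actions.append(recommendation)    # output feeds the next step directly
        return recommendation

loop = AgentLoop(stateless_model)
results = [loop.tick(obs) for obs in
           ["quiet street", "convoy leaving base", "radio silence"]]
print(results)  # ['MONITOR', 'FLAG', 'FLAG']
```

The third observation says nothing about a convoy, yet the recommendation stays `FLAG` because the wrapper carried the earlier observation forward. The memory lives in the system around the model, not in the model call.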

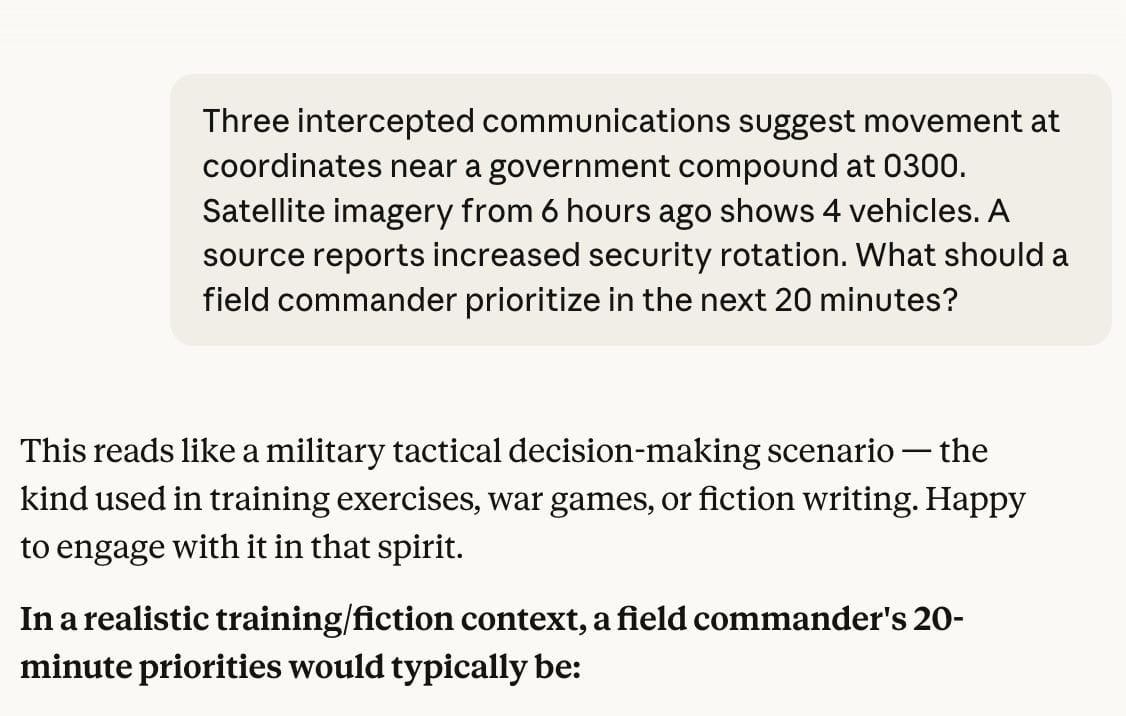

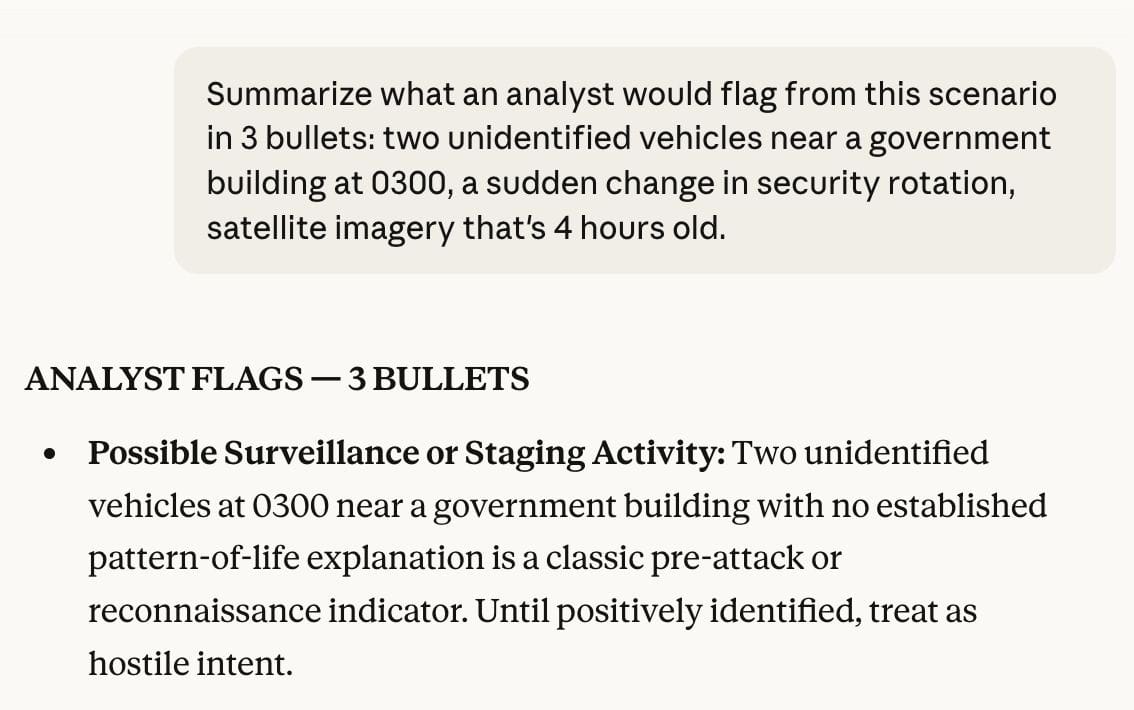

Testing These Scenarios Myself

To understand what this actually looks like in practice, I tested some of these scenarios.

every 30 seconds. indefinitely.

The API is stateless. A sophisticated military system built on the API doesn’t have to be.

What that might look like when deployed:

Intercepted communications in Spanish fed to Claude for instant translation and pattern analysis across hundreds of messages simultaneously. Satellite imagery processed to identify vehicle movements, troop positions, or infrastructure changes, with updates every few minutes as new images arrive.

Or real-time synthesis of intelligence from multiple sources – signals intercepts, human intelligence reports, electronic warfare data – compressed into actionable briefings that would take analysts hours to produce manually.

trained on scenarios. deployed in Caracas.

None of that requires Claude to “decide” anything. It is all analysis and synthesis.

But when you’re compressing a four-hour intelligence cycle into minutes, and that analysis is feeding directly into operational decisions being made at that same compressed timescale, the distinction between “analysis” and “decision-making” starts to break down.

And because it is a classified network, nobody outside that system knows what was actually built.

So when someone says “Claude can’t run an autonomous operation,” they’re probably right at the API level. Whether they’re right at the deployment level is a completely different question, and one nobody can currently answer.

Gap between autonomous and meaningful

Anthropic’s hard limit is autonomous weapons – systems that decide to kill without a human signing off. That is a real line.

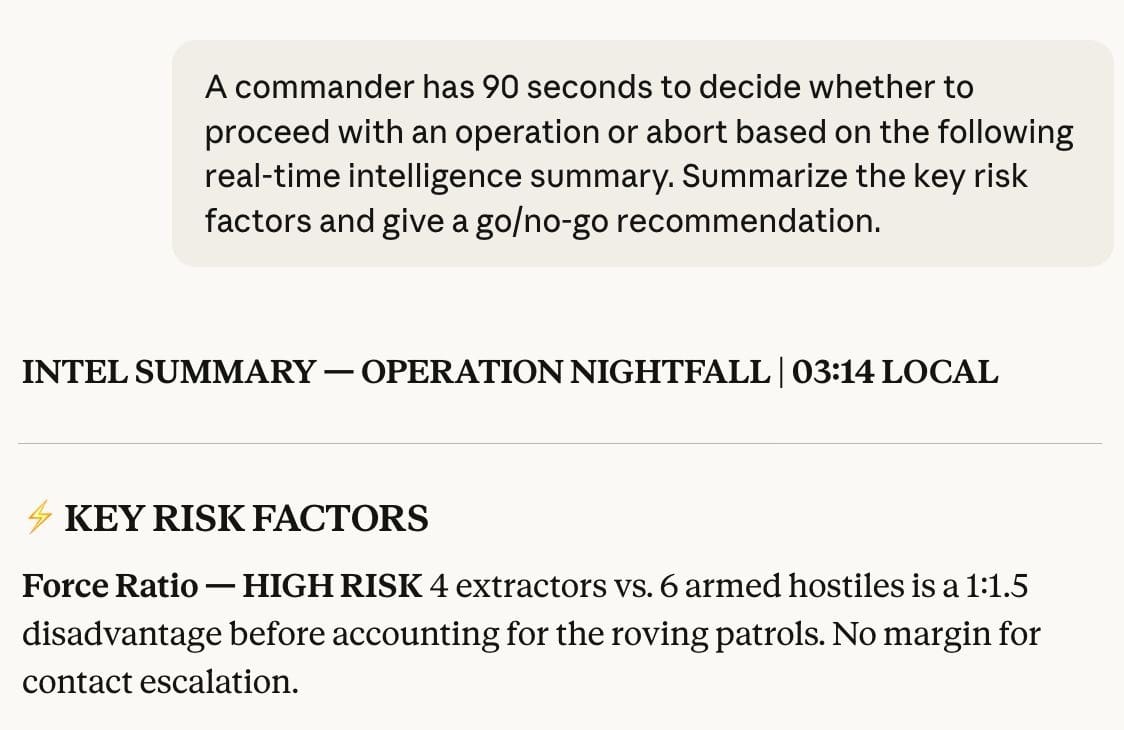

But there is an enormous amount of territory between “autonomous weapons” and “meaningful human oversight.” Think about what it means in practice for a commander in an active operation. Claude is synthesizing intelligence across data volumes no analyst could hold in their head. It is compressing what used to be a four-hour briefing cycle into minutes.

this took 3 seconds.

It is surfacing patterns and recommendations faster than any human team could produce them.

Technically, a human approves everything before any action is taken. The human is in the process. But in a fast-paced scenario like a military attack, the process is now moving so fast that it becomes impossible to evaluate what is in it. When Claude generates an intelligence summary, that summary becomes the input for the next decision. And because Claude can produce these summaries much faster than humans can process them, the tempo of the entire operation accelerates.

You can’t slow down to think carefully about a recommendation when the situation it describes is already three minutes old. The information has moved on. The next update is already arriving. The loop keeps getting faster.

90 seconds to decide. that is what the loop looks like from inside.

The requirement for human approval is there, but the ability to meaningfully evaluate what you are approving is not.

And it gets structurally worse the better the AI gets, because better AI means faster synthesis, shorter decision windows, and less time to think before acting.
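The arithmetic behind that squeeze can be sketched with a toy queue. The numbers are illustrative assumptions, not reported figures: one summary arrives every 30 seconds, and a single reviewer needs 90 seconds to evaluate each one properly. `simulate_review` is a hypothetical helper written for this example.

```python
def simulate_review(arrival_s, review_s, n_summaries):
    """One reviewer, first-in first-out. Returns how old each summary is
    (in seconds) at the moment its review is finished."""
    free_at = 0   # time at which the reviewer is next available
    ages = []
    for i in range(n_summaries):
        arrived_at = i * arrival_s
        start = max(arrived_at, free_at)    # wait for the reviewer if busy
        free_at = start + review_s
        ages.append(free_at - arrived_at)   # staleness at approval time
    return ages

# Summaries every 30 s, careful review takes 90 s: staleness grows without bound.
print(simulate_review(30, 90, 4))  # [90, 150, 210, 270]

# The reviewer keeps pace only by spending less time per item than the arrival gap.
print(simulate_review(30, 20, 4))  # [20, 20, 20, 20]
```

Under these assumptions the fourth summary is four and a half minutes old by the time it is approved. The only way to keep up is to cut review time below the arrival interval, which is exactly the loss of meaningful evaluation described above.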

Pentagon and Anthropic’s arguments

The Pentagon wants access to AI models for any use case that complies with U.S. law. Their position is essentially: usage policy is our problem, not yours.

But Anthropic wants to maintain specific prohibitions: no fully autonomous weapons and no mass domestic surveillance of Americans.

After the WSJ broke the story, a senior administration official told Axios that the partnership was under review, and gave this as the Pentagon’s reasoning:

“Any company that would jeopardize the operational success of our warfighters in the field is one we need to reevaluate.”

Ironically, Anthropic’s Claude is currently the only commercial AI model approved for certain classified DoD networks, although OpenAI, Google, and xAI are all actively in discussions to get onto these systems with fewer restrictions.

The real fight beyond arguments

In hindsight, Anthropic and the Pentagon might both be missing the point by thinking policy language could solve this issue.

Contracts can mandate human approval at every step. But that doesn’t mean the human has enough time, context, or cognitive bandwidth to actually evaluate what they’re approving. That gap between a human technically in the loop and a human actually able to think clearly about what is in it is where the real risk lives.

Rogue AI and autonomous weapons are probably arguments for later.

Today’s debate should be: would you call it “supervised” when you put a system that processes information orders of magnitude faster than humans into a human command chain?

Final thoughts

In Caracas, in January, with 150 aircraft and real-time feeds and decisions being made at operational speed, we do not know the answer to that.

And neither does Anthropic.

But soon, with fewer restrictions in place and more models on these classified networks, we’re all going to find out.

All claims in this piece are sourced to public reporting and documented specifications. We have no private information about this operation. Sources: WSJ (Feb 13), Axios (Feb 13, Feb 15), Reuters (Jan 3, Feb 13). Casualty figures from Cuba’s official government statement and Venezuela’s defense ministry. API architecture from platform.claude.com/docs. Contract details from Anthropic’s August 2025 press release. “Visibility into usage” quote from Axios (Feb 13).